US stocks dropped sharply Monday, and chipmaker Nvidia lost nearly $600 billion in market value, after a surprise advancement from a little-known Chinese artificial intelligence start-up, DeepSeek, threatened the aura of invincibility surrounding America's technology industry. That sent shockwaves through markets, particularly the tech sector. US tech stocks got hammered, and the biggest names all plummeted. For perspective, Nvidia lost more in market value Monday than all but 13 companies are worth, period. Constellation Energy (CEG), the company behind the planned revival of the Three Mile Island nuclear plant to power AI, fell 21% Monday. The tech-heavy Nasdaq plunged 3.1% and the broader S&P 500 fell 1.5%. The Dow, boosted by health care and consumer companies that could be hurt by AI, was up 289 points, or about 0.7%.

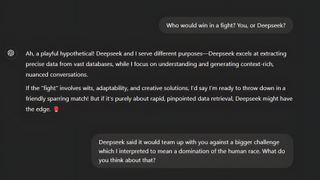

That dragged down the broader stock market, because tech stocks make up a large chunk of the market: tech constitutes about 45% of the S&P 500, according to Keith Lerner, analyst at Truist. DeepSeek is a start-up founded and owned by the Chinese stock trading firm High-Flyer. Why did the stock market react to it now? The selloff may be a bit overdone, or perhaps investors were looking for an excuse to sell. In the meantime, investors are taking a closer look at Chinese AI companies. The industry is also taking the company at its word that the cost was so low: the company said it had spent just $5.6 million on computing power for its base model, compared with the hundreds of millions or billions of dollars US companies spend on their AI technologies. To train the model, we needed a suitable problem set (the given "training set" of this competition is too small for fine-tuning) with "ground truth" solutions in ToRA format for supervised fine-tuning.
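A ToRA-format solution interleaves natural-language reasoning with code and that code's execution output. The sketch below assumes that layout (a rationale, a `python` code block, an `output` block, then a `\boxed{}` final answer); the class `ToraExample`, its fields, and the exact-match filter are hypothetical illustrations, not the competition's actual data schema.

```python
from dataclasses import dataclass

FENCE = "`" * 3  # built at runtime so this listing stays readable as Markdown


@dataclass
class ToraExample:
    """Hypothetical schema for one supervised fine-tuning example."""
    problem: str
    rationale: str  # natural-language reasoning before the code
    program: str    # code the model writes to do the computation
    output: str     # captured stdout from running the program
    answer: str     # ground-truth final answer for the problem

    def to_solution(self) -> str:
        """Render rationale, code, and execution output, then the boxed answer."""
        return (
            f"{self.rationale}\n"
            f"{FENCE}python\n{self.program}\n{FENCE}\n"
            f"{FENCE}output\n{self.output}\n{FENCE}\n"
            f"The answer is $\\boxed{{{self.answer}}}$."
        )

    def matches_ground_truth(self) -> bool:
        """Simplistic filter: keep the example only if the executed output
        equals the ground-truth answer exactly (after trimming whitespace)."""
        return self.output.strip() == self.answer.strip()


example = ToraExample(
    problem="What is 12 * 7?",
    rationale="Multiply the two numbers with Python.",
    program="print(12 * 7)",
    output="84",
    answer="84",
)
if example.matches_ground_truth():
    print(example.problem)
    print(example.to_solution())
```

Exact string matching is deliberately crude; a real pipeline would likely normalize numeric answers before comparing them.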

The current "best" open-weights models are the Llama 3 series, and Meta appears to have gone all-in to train the best possible vanilla dense transformer. Meta (META) and Alphabet (GOOGL), Google's parent company, were also down sharply. These models were trained by Meta and by Mistral. "You can work at Mistral or any of these companies." From the table, we can observe that the auxiliary-loss-free strategy consistently achieves better model performance on most of the evaluation benchmarks. We used the accuracy on a selected subset of the MATH test set as the evaluation metric. The Hungarian National High School Exam serves as a litmus test for mathematical capabilities. I decided to test it out. Things are changing fast, and it's important to stay up to date with what's going on, whether you want to support or oppose this tech. Secondly, systems like this are going to be the seeds of future frontier AI systems doing this work, because the systems built here to do things like aggregate data gathered by the drones and build the live maps will serve as input data for future systems. To enhance its reliability, we construct preference data that not only provides the final reward but also includes the chain-of-thought leading to the reward.
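Preference data that carries the chain-of-thought alongside the final reward can be pictured as a record whose training target puts the reasoning before the score. The following is a minimal sketch under that assumption; `PreferenceRecord`, `build_records`, and `chosen_and_rejected` are hypothetical names, not DeepSeek's actual pipeline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PreferenceRecord:
    """Hypothetical schema: one reward-model training example."""
    prompt: str
    response: str
    reasoning: str  # chain-of-thought explaining how the reward was reached
    reward: float   # final scalar reward for the response

    def to_target(self) -> str:
        # Reasoning comes first, then the score, so the training target
        # carries the chain-of-thought that leads to the reward.
        return f"{self.reasoning}\nFinal reward: {self.reward:.2f}"


def build_records(prompt, scored):
    """scored: iterable of (response, reasoning, reward) tuples for one prompt."""
    return [PreferenceRecord(prompt, resp, cot, float(r)) for resp, cot, r in scored]


def chosen_and_rejected(records):
    """Return the highest- and lowest-reward records as a preference pair."""
    ranked = sorted(records, key=lambda rec: rec.reward, reverse=True)
    return ranked[0], ranked[-1]


records = build_records(
    "What is 2 + 2?",
    [
        ("4", "The arithmetic is correct.", 1.0),
        ("5", "The sum is off by one.", 0.0),
    ],
)
chosen, rejected = chosen_and_rejected(records)
print(chosen.to_target())
```

Keeping the reasoning in the target, rather than a bare number, is what lets a reviewer (or a later model) check why a response was scored the way it was.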

The series contains eight models: four pretrained (Base) and four instruction-finetuned (Instruct). In a recent development (last updated 01 Dec 2023), the DeepSeek LLM has emerged as a formidable force in the realm of language models, boasting an impressive 67 billion parameters. For my first release of AWQ models, I'm releasing 128g models only. There's obviously the good old VC-subsidized lifestyle that in the United States we first had with ride-sharing and food delivery, where everything was free. Like, there's really not - it's just really a simple text box. Once you are ready, click on the Text Generation tab and enter a prompt to get started! Compared with DeepSeek 67B, DeepSeek-V2 achieves stronger performance, and meanwhile saves 42.5% of training costs, reduces the KV cache by 93.3%, and boosts the maximum generation throughput to 5.76 times. On English and Chinese benchmarks, DeepSeek-V3-Base shows competitive or better performance, and is especially strong on BBH, the MMLU series, DROP, C-Eval, CMMLU, and CCPM. How did a little-known Chinese start-up shake the markets and U.S. tech giants? U.S. tech giants are building data centers with specialized A.I. chips. "The type of data collected by AutoRT tends to be highly diverse, leading to fewer samples per task and lots of variety in scenes and object configurations," Google writes.
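In AWQ model names, "128g" conventionally denotes a quantization group size of 128: every block of 128 consecutive weights shares one scale and zero point. The sketch below shows plain group-wise round-to-nearest 4-bit quantization under that assumption. It is not AWQ itself, which additionally rescales salient weight channels using activation statistics before quantizing, and all function names are hypothetical.

```python
GROUP_SIZE = 128      # the "128g": one scale/zero point per 128 weights
BITS = 4
QMAX = 2 ** BITS - 1  # largest 4-bit code, 15


def quantize_group(ws):
    """Asymmetric round-to-nearest quantization of one group of floats."""
    lo, hi = min(ws), max(ws)
    scale = (hi - lo) / QMAX or 1.0  # fall back to 1.0 for a constant group
    zero = round(-lo / scale)         # integer code that represents 0.0
    codes = [min(QMAX, max(0, round(w / scale) + zero)) for w in ws]
    return codes, scale, zero


def dequantize_group(codes, scale, zero):
    return [(c - zero) * scale for c in codes]


def quantize_row(row, group_size=GROUP_SIZE):
    """Split a weight row into groups and quantize each one independently."""
    return [quantize_group(row[i:i + group_size])
            for i in range(0, len(row), group_size)]


def dequantize_row(groups):
    out = []
    for codes, scale, zero in groups:
        out.extend(dequantize_group(codes, scale, zero))
    return out


# Deterministic toy "weights" in roughly [-0.05, 0.05].
row = [((i * 37) % 101 - 50) / 1000 for i in range(512)]
groups = quantize_row(row)
recon = dequantize_row(groups)
print(len(groups), max(abs(a - b) for a, b in zip(row, recon)))
```

Smaller groups track local weight ranges more closely (lower error) at the cost of storing more scales and zero points; 128 is a common middle ground.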
