DeepSeek AI, a Chinese AI startup, has announced the launch of the DeepSeek LLM family, a set of open-source large language models (LLMs) that achieve remarkable results in a variety of language tasks. DeepSeek differs from other language models in that it is a collection of open-source large language models that excel at language comprehension and versatile application. The startup provided insights into its meticulous data collection and training process, which focused on enhancing diversity and originality while respecting intellectual property rights. Generating synthetic data is more resource-efficient than traditional training methods. Higher CPU clock speeds also improve prompt processing, so aim for 3.6 GHz or more. The DeepSeek app offers just two models: DeepSeek-V3 is the default, and if you want to use its advanced reasoning model you must tap or click the 'DeepThink (R1)' button before entering your prompt. It’s hard to filter it out at pretraining, especially if it makes the model better (so you might want to turn a blind eye to it). DeepSeek may show that cutting off access to a key technology doesn’t necessarily mean the United States will win.

Whatever the case may be, developers have taken to DeepSeek’s models, which aren’t open source as the phrase is commonly understood but are available under permissive licenses that allow for commercial use. Why this is so impressive: The robots get a massively pixelated image of the world in front of them and, nonetheless, are able to automatically learn a bunch of sophisticated behaviors. Why this matters - scale is probably the most important thing: "Our models demonstrate strong generalization capabilities on a variety of human-centric tasks." These evaluations effectively highlighted the model’s exceptional capabilities in handling previously unseen exams and tasks. Another notable achievement of the DeepSeek LLM family is the LLM 7B Chat and 67B Chat models, which are specialized for conversational tasks. The DeepSeek LLM family consists of four models: DeepSeek LLM 7B Base, DeepSeek LLM 67B Base, DeepSeek LLM 7B Chat, and DeepSeek LLM 67B Chat.

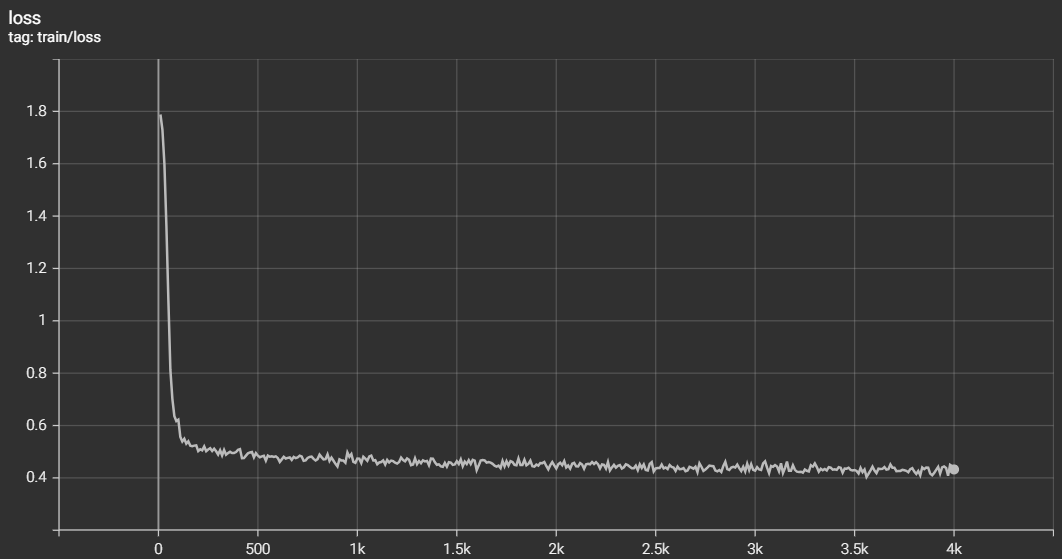

One of the main features that distinguishes the DeepSeek LLM family from other LLMs is the superior performance of the 67B Base model, which outperforms the Llama2 70B Base model in several domains, such as reasoning, coding, mathematics, and Chinese comprehension. The weights of these large language models have to be read in full from RAM or VRAM each time the model generates a new token (piece of text). The training regimen employed large batch sizes and a multi-step learning rate schedule, ensuring robust and efficient learning. The 67B Base model demonstrates a qualitative leap in the capabilities of DeepSeek LLMs, showing their proficiency across a wide range of applications. I've been building AI applications for the past four years and contributing to major AI tooling platforms for a while now. Remember, while you can offload some weights to the system RAM, it will come at a performance cost. The 7B model used Multi-Head Attention, while the 67B model used Grouped-Query Attention.
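To put the memory point above into numbers, here is a minimal sketch that estimates how much space the weights alone occupy and how much would spill over into system RAM. It assumes nominal parameter counts of 7 and 67 billion, common precisions (fp16, int8, int4), and a hypothetical 24 GB GPU; the function names are illustrative, and the estimate ignores the KV cache, activations, and runtime overhead.

```python
# Rough weight-memory estimate: parameter count x bytes per parameter.
# Assumptions (not from the article): nominal parameter counts, the
# precisions listed below, and a hypothetical 24 GB GPU.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate size of the weights in GB (10^9 bytes).

    Ignores the KV cache, activations, and runtime overhead.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def ram_spill_gb(num_params: float, precision: str, vram_gb: float) -> float:
    """GB of weights that do not fit in VRAM and would sit in system RAM."""
    return max(0.0, weight_memory_gb(num_params, precision) - vram_gb)


if __name__ == "__main__":
    vram_gb = 24.0  # hypothetical GPU memory budget
    for name, params in (("7B", 7e9), ("67B", 67e9)):
        for precision in BYTES_PER_PARAM:
            total = weight_memory_gb(params, precision)
            spill = ram_spill_gb(params, precision, vram_gb)
            print(f"{name} {precision}: {total:.1f} GB weights, "
                  f"{spill:.1f} GB offloaded to system RAM")
```

Under these assumptions, the 7B model fits on such a GPU at every listed precision, while the 67B model exceeds it even at 4 bits per weight, so part of it would have to run from slower system RAM.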

The LLM was trained on a large dataset of 2 trillion tokens in both English and Chinese, employing architectures such as LLaMA and Grouped-Query Attention. It also scored 84.1% on the GSM8K mathematics dataset without fine-tuning, showing outstanding prowess in solving mathematical problems. To ensure unbiased and thorough performance assessments, DeepSeek AI used new problem sets, such as the Hungarian National High-School Exam and Google’s instruction-following evaluation dataset. Chinese state media praised DeepSeek as a national asset and invited Liang to meet with Li Qiang. Italy’s data protection authority has blocked the Chinese AI chatbot DeepSeek after its developers failed to disclose how it collects user data or whether it is stored on Chinese servers. The authority’s decision, aimed at protecting Italian users’ data, came after the Chinese companies that supply chatbot service to DeepSeek provided information that "was considered to be totally inadequate," the authority said in a note on its website.