In recent years, it has become best known as the technology behind chatbots such as ChatGPT and DeepSeek, often referred to as generative AI. Last updated 01 Dec, 2023. In a recent development, the DeepSeek LLM has emerged as a formidable force in the realm of language models, boasting an impressive 67 billion parameters. Why this matters - language models are a broadly disseminated and well-understood technology: Papers like this show that language models are a class of AI system that is very well understood at this point - there are now numerous teams in countries around the world who have shown themselves able to do end-to-end development of a non-trivial system, from dataset gathering through to architecture design and subsequent human calibration. What they built - BIOPROT: The researchers developed "an automated approach to evaluating the ability of a language model to write biological protocols". [...] until the model consumes 10T training tokens. No proprietary data or training methods were used: the Mistral 7B - Instruct model is a simple, preliminary demonstration that the base model can easily be fine-tuned to achieve good performance.

However, too large an auxiliary loss will impair model performance (Wang et al., 2024a). To achieve a better trade-off between load balance and model performance, we pioneer an auxiliary-loss-free load balancing strategy (Wang et al., 2024a) to ensure load balance. From this perspective, each token will select 9 experts during routing, where the shared expert is regarded as a heavy-load one that will always be selected. In addition, we add a per-token KL penalty from the SFT model at every token to mitigate over-optimization of the reward model. Finally, the update rule is the parameter update from PPO that maximizes the reward metrics in the current batch of data (PPO is on-policy, which means the parameters are only updated with the current batch of prompt-generation pairs). This fixed attention span means we can implement a rolling buffer cache. In effect, this means that we clip the ends and perform a scaling computation in the middle. In DeepSeek-V3, we implement the overlap between computation and communication to hide the communication latency during computation. At inference time, this incurs higher latency and lower throughput due to reduced cache availability. In addition, although the batch-wise load balancing methods show consistent performance advantages, they also face two potential challenges in efficiency: (1) load imbalance within certain sequences or small batches, and (2) domain-shift-induced load imbalance during inference.
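The auxiliary-loss-free idea can be sketched in a few lines. This is a minimal sketch, assuming the bias-adjusted top-k routing described for DeepSeek-V3: a per-expert bias is added to each token's affinity scores only when choosing which experts to use, the gating weights still come from the raw scores, and after each batch the bias of an overloaded expert is lowered (and that of an underloaded one raised) by a fixed step. The expert count (16), the step size `gamma`, the synthetic skewed scores, and the helper names `select_experts`, `update_bias`, and `balance_demo` are hypothetical. Eight routed experts plus the always-selected shared expert gives the 9 experts per token mentioned above.

```python
import random

NUM_EXPERTS = 16  # hypothetical number of routed experts
TOP_K = 8         # routed experts per token; with the shared expert, 9 in total
SHARED = "shared"


def select_experts(scores, bias, k=TOP_K):
    """Return the experts chosen for one token and their gating weights.

    The bias only decides *which* routed experts are picked; the gating
    weights are normalized from the raw affinity scores.
    """
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    routed = ranked[:k]
    total = sum(scores[i] for i in routed)
    gates = {i: scores[i] / total for i in routed}
    # The shared expert is always selected, so it never goes through routing.
    return [SHARED] + routed, gates


def update_bias(bias, loads, gamma=0.01):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean = sum(loads) / len(loads)
    new_bias = []
    for b, load in zip(bias, loads):
        if load > mean:
            new_bias.append(b - gamma)
        elif load < mean:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias


def balance_demo(steps=200, tokens=64, seed=0):
    """Route a fixed batch repeatedly; return the max-min expert load per step."""
    rng = random.Random(seed)
    # Synthetic affinities in (0, 1), skewed toward low-index experts.
    batch = [
        [0.5 * rng.random() + 0.5 * (1 - i / NUM_EXPERTS) for i in range(NUM_EXPERTS)]
        for _ in range(tokens)
    ]
    bias = [0.0] * NUM_EXPERTS
    spread = []
    for _ in range(steps):
        loads = [0] * NUM_EXPERTS
        for scores in batch:
            experts, _ = select_experts(scores, bias)
            for e in experts[1:]:  # count routed experts only
                loads[e] += 1
        spread.append(max(loads) - min(loads))
        bias = update_bias(bias, loads)
    return spread
```

Comparing `balance_demo()[0]` with `balance_demo()[-1]` should show the max-min load gap shrinking as the biases adapt. Because the bias never enters the gating weights, it steers routing without adding a loss term that competes with the training objective, which is the trade-off the paragraph above is concerned with.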

The evaluation results validate the effectiveness of our approach, as DeepSeek-V2 achieves remarkable performance on both standard benchmarks and open-ended generation evaluation. By adding the directive "You need first to write a step-by-step outline and then write the code." after the initial prompt, we have observed improvements in performance. Jack Clark's Import AI publishes first on Substack. DeepSeek makes the best coding model in its class and releases it as open source:… Import AI runs on lattes, ramen, and feedback from readers. Made in China might be a thing for AI models, same as electric cars, drones, and other technologies… The clipping will obviously lose accuracy, and so will the rounding. For more information, visit the official documentation page. To incorporate file path information, a comment indicating the file's path is added at the beginning of each file. The dependencies between files are parsed, and the files are then arranged in an order that ensures the context each file depends on comes before the code of the current file. This observation leads us to believe that first crafting detailed code descriptions helps the model understand and address the intricacies of logic and dependencies in coding tasks more effectively, particularly those of higher complexity.
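The file-ordering step can be sketched with the standard-library `graphlib.TopologicalSorter`. This is a minimal sketch, assuming a repository of Python files whose dependencies can be approximated by matching `import x` and `from x import ...` lines with a regular expression; the actual dependency parser is not described here, and the helper names `parse_dependencies` and `concat_repo` are hypothetical. Each file is prefixed with a comment holding its path, and every file is emitted after the files it imports.

```python
import re
from graphlib import TopologicalSorter

# Simplified import detection (assumption): only the first module on each
# `import` / `from ... import` line is captured.
IMPORT_RE = re.compile(r"^\s*(?:from|import)\s+([A-Za-z_][\w.]*)", re.MULTILINE)


def module_name(path):
    """Map "app/models.py" to the module name "app.models"."""
    return path[:-3].replace("/", ".") if path.endswith(".py") else path


def parse_dependencies(files):
    """Return {path: set of in-repo paths that this file imports}."""
    modules = {module_name(p): p for p in files}
    graph = {}
    for path, source in files.items():
        deps = set()
        for name in IMPORT_RE.findall(source):
            # Ignore third-party / stdlib imports and self-references.
            if name in modules and modules[name] != path:
                deps.add(modules[name])
        graph[path] = deps
    return graph


def concat_repo(files):
    """Concatenate files so each one follows its dependencies, with a path comment."""
    order = TopologicalSorter(parse_dependencies(files)).static_order()
    return "\n".join(f"# {path}\n{files[path]}" for path in order)
```

`static_order()` raises `graphlib.CycleError` on circular imports, so a real pipeline would need a fallback for cycles.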

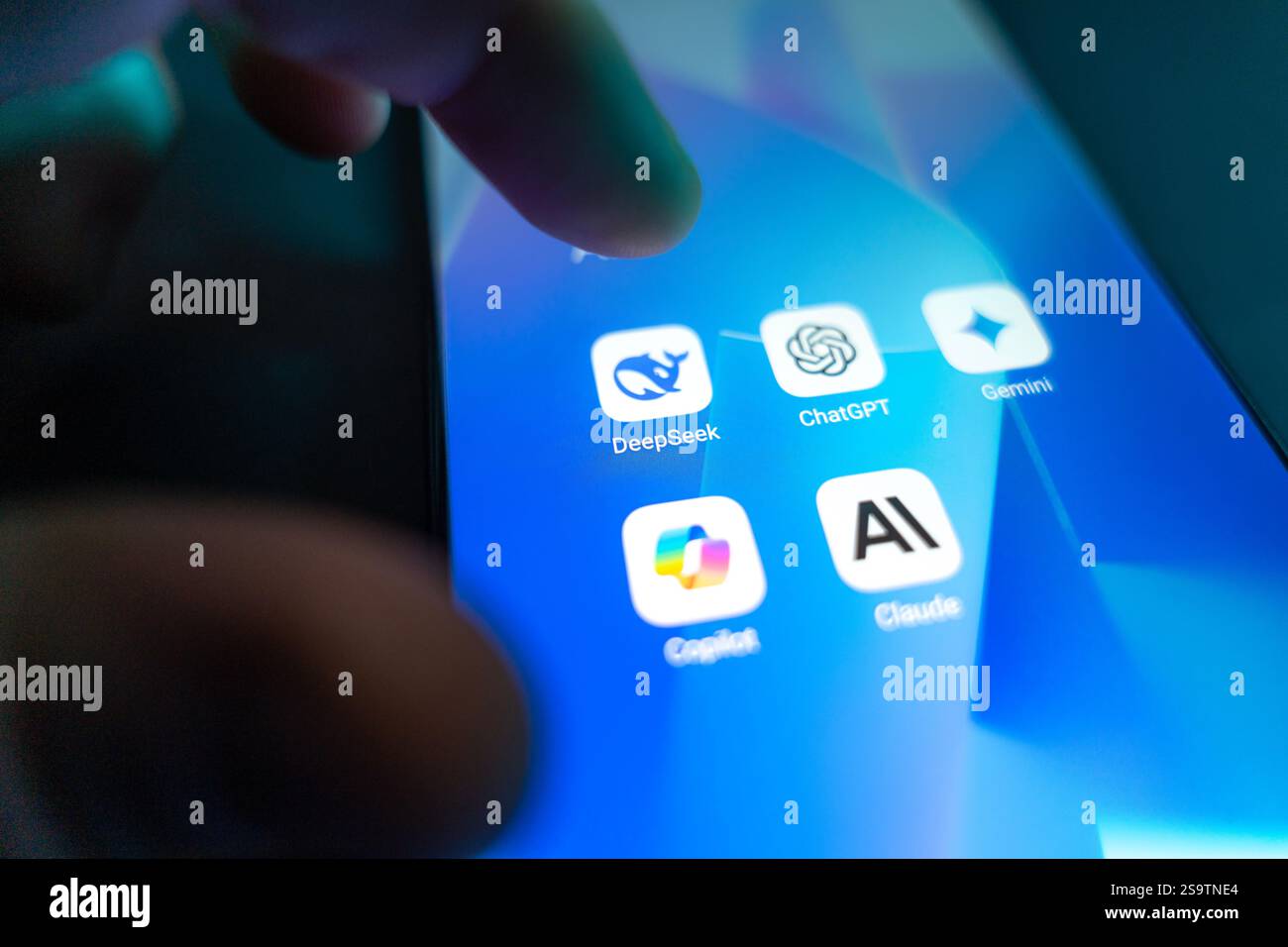

I'm primarily interested in its coding capabilities and in what can be done to improve them. Before we start, we should mention that there are a huge number of proprietary "AI as a Service" offerings such as ChatGPT, Claude, and so on. We only want to use what we can download and run locally, no black magic. Open WebUI has opened up a whole new world of possibilities for me, allowing me to take control of my AI experiences and explore the vast array of OpenAI-compatible APIs out there. This post was more about understanding some basic concepts; I'll now take this learning for a spin and try out the deepseek-coder model.

Check out the leaderboard here: BALROG (official benchmark site). Furthermore, existing knowledge editing techniques also have substantial room for improvement on this benchmark. The MBPP benchmark consists of 500 problems in a few-shot setting. What is MBPP?

Note that tokens outside the sliding window still influence next-word prediction. SWA exploits the stacked layers of a transformer to attend to information beyond the window size W: after k attention layers, information can move forward by up to k × W tokens. The world is increasingly connected, with seemingly limitless amounts of information available across the internet.
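The k × W argument can be checked with a small simulation. This minimal sketch assumes the Mistral 7B convention that position i in one layer attends to positions i - W through i of the previous layer; `sliding_window_mask` and `receptive_field` are hypothetical helpers that track which input positions have reached each output position after a given number of layers.

```python
def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when position i may attend to position j.

    Assumed convention: causal attention over positions i - window .. i.
    """
    return [[i - window <= j <= i for j in range(seq_len)] for i in range(seq_len)]


def receptive_field(seq_len, window, layers):
    """Earliest input position that can influence each position after `layers` layers."""
    mask = sliding_window_mask(seq_len, window)
    # reach[i] holds the input positions whose information has reached position i.
    reach = [{i} for i in range(seq_len)]
    for _ in range(layers):
        reach = [
            set().union(*(reach[j] for j in range(seq_len) if mask[i][j]))
            for i in range(seq_len)
        ]
    return [min(r) for r in reach]
```

For example, with W = 3, `receptive_field(20, 3, 2)[19]` returns 13: two layers let position 19 draw on information from position 19 - 2 × 3 = 13, even though each individual layer only looks back 3 positions.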