A user supplies a text command, and the robot uses its sensor inputs to remove noise from a pure-noise action embedding to generate an appropriate action. π0 is built on PaliGemma, which comprises SigLIP, a vision transformer that turns images into embeddings; a linear layer that adapts the image embeddings to serve as input for the pretrained large language model Gemma; and Gemma, which estimates the noise to be removed from a robot action embedding to which noise has been added. The authors added a vanilla neural network at the input to turn the current timestep into an embedding, and a linear layer projects the resulting embeddings to fit Gemma's expected input dimension and data distribution. Gemma processes these inputs with two sets of weights: one is its pretrained weights, which handle the image and text embeddings; the other is a new set of weights that processes robot action embeddings. Given the images, text command, robot's state, current timestep, and 50 noisy action tokens (starting with pure noise), Gemma iteratively removes noise. The authors pretrained π0 to remove noise from action embeddings. After pretraining, they fine-tuned π0 to remove noise from action tokens in 15 additional tasks, some of which weren't represented in the pretraining set. For instance, when using a single robot arm to stack a set of bowls of four sizes, π0 scored about 100 percent on average.
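
The iterative denoising loop described above can be sketched in plain Python. This is a minimal sketch under stated assumptions, not π0's implementation: it assumes a flow-matching-style straight-line path between noise and action (the π0 paper describes the model as a flow model, as we understand it), in which the predictor outputs a velocity pointing from the clean action toward the noise, and each step moves the 50 action tokens a little way back along that direction. `oracle_velocity`, `ACTION_DIM`, and `NUM_STEPS` are hypothetical; the oracle is handed the true action so the loop can be checked, whereas Gemma would have to estimate it from the images, text command, robot state, and timestep embedding.

```python
import random
from typing import Callable, List

Chunk = List[List[float]]  # NUM_ACTION_TOKENS x ACTION_DIM

NUM_ACTION_TOKENS = 50  # the article's 50 noisy action tokens
ACTION_DIM = 7          # hypothetical: e.g. 6 joint targets + 1 gripper command
NUM_STEPS = 10          # hypothetical number of denoising steps


def oracle_velocity(x_t: Chunk, t: float, target: Chunk) -> Chunk:
    """Hypothetical stand-in for Gemma's prediction.

    Assumes the straight-line path x_t = t * noise + (1 - t) * action, whose
    velocity dx/dt = noise - action equals (x_t - action) / t. A real model
    would estimate this from its inputs instead of being handed the action.
    """
    return [[(xv - av) / t for xv, av in zip(x_tok, a_tok)]
            for x_tok, a_tok in zip(x_t, target)]


def denoise(predict: Callable[[Chunk, float, Chunk], Chunk],
            cond: Chunk, seed: int = 0) -> Chunk:
    """Integrate from pure noise (t = 1) to a clean action chunk (t = 0)."""
    rng = random.Random(seed)
    # Start from pure Gaussian noise for every action token.
    x = [[rng.gauss(0.0, 1.0) for _ in range(ACTION_DIM)]
         for _ in range(NUM_ACTION_TOKENS)]
    dt = 1.0 / NUM_STEPS
    for step in range(NUM_STEPS):
        t = 1.0 - step * dt
        v = predict(x, t, cond)
        # Euler step from t to t - dt along the predicted velocity.
        x = [[xv - dt * vv for xv, vv in zip(x_tok, v_tok)]
             for x_tok, v_tok in zip(x, v)]
    return x


if __name__ == "__main__":
    target = [[0.01 * (i + j) for j in range(ACTION_DIM)]
              for i in range(NUM_ACTION_TOKENS)]
    result = denoise(oracle_velocity, target)
    err = max(abs(r - a) for r_tok, a_tok in zip(result, target)
              for r, a in zip(r_tok, a_tok))
    print(f"max error after {NUM_STEPS} steps: {err:.2e}")
```

Because the oracle is exact and the assumed path is a straight line, the Euler steps land on the target action chunk up to floating-point error; with a learned predictor, the number of steps trades speed against accuracy.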

Anthropic will train its models using Amazon's Trainium chips, which are designed for training neural networks of 100 billion parameters and up. Anthropic will contribute to developing Amazon's Neuron toolkit, software that accelerates deep learning workloads on Trainium and Inferentia chips. Previously Anthropic ran its Claude models on Nvidia hardware; going forward, Anthropic will run them on Amazon's Inferentia chips, according to The Information. Amazon executives previously claimed that these chips could reduce training costs by as much as 50 percent compared to Nvidia graphics processing units (GPUs). The company's work has already yielded notable results: the Inflection AI cluster, currently comprising over 3,500 NVIDIA H100 Tensor Core GPUs, delivered state-of-the-art performance on the open-source benchmark MLPerf. It's part of an important movement, after years of scaling models by raising parameter counts and amassing bigger datasets, toward achieving high performance by spending more compute on generating output. Behind the news: DeepSeek-R1 follows OpenAI in implementing this approach at a time when scaling laws that predict increased performance from larger models and/or more training data are being questioned.

Monte-Carlo Tree Search, on the other hand, is a method of exploring possible sequences of actions (in this case, logical steps) by simulating many random "play-outs" and using the results to guide the search toward more promising paths.

More recently, Google and other search tools have begun providing AI-generated, contextual responses to search prompts as the top result of a query. Interact with LLMs from anywhere in Emacs (any buffer, shell, minibuffer, anywhere); LLM responses are in Markdown or Org markup. It offers robust support for various Large Language Model (LLM) runners, including Ollama and OpenAI-compatible APIs.

Some U.S. officials appear to support OpenAI's concerns. As a Darden School professor, what do you think this means for U.S. Geopolitical Considerations: DeepSeek's success challenges U.S.

Results: π0 outperformed the open robotics models OpenVLA, Octo, ACT, and Diffusion Policy, all of which were fine-tuned on the same data, on all tasks tested, as measured by a robot's success rate in completing each task. Skild raised $300 million to develop a "general-purpose brain for robots." Figure AI secured $675 million to build humanoid robots powered by multimodal models. Why it matters: Robots have been slow to benefit from machine learning, but the generative AI revolution is driving rapid innovations that make them far more useful.
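
The Monte-Carlo Tree Search described earlier can be illustrated with a toy problem. This is a minimal sketch, not any particular model's search procedure: it uses the common UCT selection rule, and the three candidate "steps" (`ACTIONS`), the sequence length (`DEPTH`), the hidden `TARGET`, and the `reward` function are all hypothetical stand-ins for logical steps and a verifier. Each iteration selects a path through the tree, expands one new node, finishes the sequence with a random play-out, and feeds the result back up the path so later searches favor promising prefixes.

```python
import math
import random
from typing import Dict, List

ACTIONS = (0, 1, 2)    # hypothetical: three candidate "logical steps"
DEPTH = 4              # hypothetical: a complete solution is four steps long
TARGET = (2, 0, 1, 2)  # hypothetical: the hidden correct sequence


def reward(seq) -> float:
    """Toy scoring: fraction of steps that match the hidden target."""
    return sum(a == b for a, b in zip(seq, TARGET)) / DEPTH


class Node:
    def __init__(self, prefix, parent=None):
        self.prefix = prefix  # tuple of actions taken so far
        self.parent = parent
        self.children: Dict[int, "Node"] = {}
        self.visits = 0
        self.total = 0.0      # sum of play-out rewards through this node

    def untried(self) -> List[int]:
        return [a for a in ACTIONS if a not in self.children]

    def ucb_child(self, c: float) -> "Node":
        # UCT: average reward plus an exploration bonus for rarely visited children.
        return max(
            self.children.values(),
            key=lambda n: n.total / n.visits
            + c * math.sqrt(math.log(self.visits) / n.visits),
        )


def mcts(iterations: int = 3000, c: float = math.sqrt(2), seed: int = 0) -> Node:
    rng = random.Random(seed)
    root = Node(())
    for _ in range(iterations):
        node = root
        # 1. Selection: descend while the node is fully expanded and not terminal.
        while len(node.prefix) < DEPTH and not node.untried():
            node = node.ucb_child(c)
        # 2. Expansion: add one untried child unless the node is terminal.
        if len(node.prefix) < DEPTH:
            a = rng.choice(node.untried())
            child = Node(node.prefix + (a,), node)
            node.children[a] = child
            node = child
        # 3. Simulation: complete the sequence with random actions (a "play-out").
        seq = list(node.prefix)
        while len(seq) < DEPTH:
            seq.append(rng.choice(ACTIONS))
        r = reward(seq)
        # 4. Backpropagation: credit every node on the path with the result.
        while node is not None:
            node.visits += 1
            node.total += r
            node = node.parent
    return root


def best_sequence(root: Node) -> List[int]:
    """Follow the most-visited child at each level of the tree."""
    seq, node = [], root
    while node.children:
        a, node = max(node.children.items(), key=lambda kv: kv[1].visits)
        seq.append(a)
    return seq


if __name__ == "__main__":
    print(best_sequence(mcts()))
```

Choosing the most-visited child, rather than the one with the highest average reward, is a common and more conservative way to read the final decision off the tree, since visit counts are less sensitive to a few lucky play-outs.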

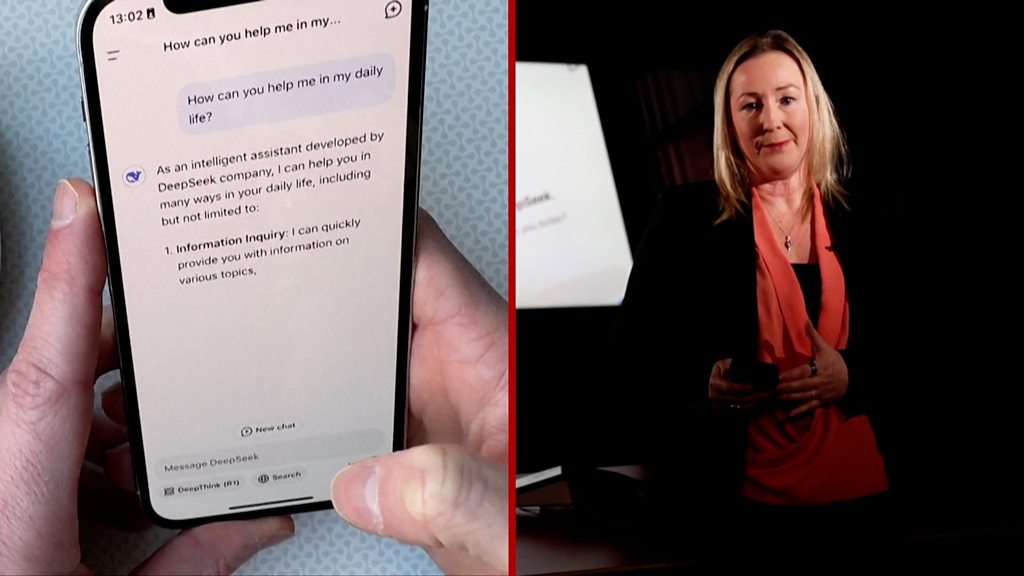

The achievement also points to the democratization of AI: making sophisticated models more accessible should ultimately drive broader adoption and proliferation of AI. Large language models have made it possible to command robots using plain English. A new generation of robots can handle some household chores with unusual skill. Household robots may not be right around the corner, but π0 shows that they can perform tasks that people need done. While DeepSeek has several AI models, some of which can be downloaded and run locally on your laptop, most people will likely access the service through its iOS or Android apps or its web chat interface. DeepSeek, the new player on the scene, is a Chinese company that has been making huge waves in AI development. The company didn't respond to a request for comment. Physical Intelligence, the company behind π0, also announced $400 million in investments from OpenAI, Jeff Bezos, and several Silicon Valley venture capital firms. Chinese AI company DeepSeek coming out of nowhere and shaking the cores of Silicon Valley and Wall Street was something no one anticipated.

If you have any questions regarding where by and how to use DeepSeek AI, you can get in touch with us at the page.