DeepSeek reportedly trained its base model, called V3, on a $5.58 million budget over two months, according to Nvidia engineer Jim Fan. The two subsidiaries have over 450 investment products. 50,000 GPUs through alternative supply routes despite trade barriers (actually, nobody knows; these extras may have been Nvidia H800s, which are compliant with the barriers and have reduced chip-to-chip transfer speeds). Organizations may need to reevaluate their partnerships with proprietary AI providers, and consider whether the high costs of those services are justified when open-source alternatives can deliver comparable, if not superior, results. DeepSeek's ability to achieve competitive results with limited resources shows how ingenuity and resourcefulness can challenge the high-cost paradigm of training state-of-the-art LLMs. With Monday's full release of R1 and the accompanying technical paper, the company revealed a surprising innovation: a deliberate departure from the conventional supervised fine-tuning (SFT) process widely used in training large language models (LLMs). One question is why the release caused so much surprise. This bias is often a reflection of human biases found in the data used to train AI models, and researchers have put much effort into "AI alignment," the process of trying to eliminate bias and align AI responses with human intent.


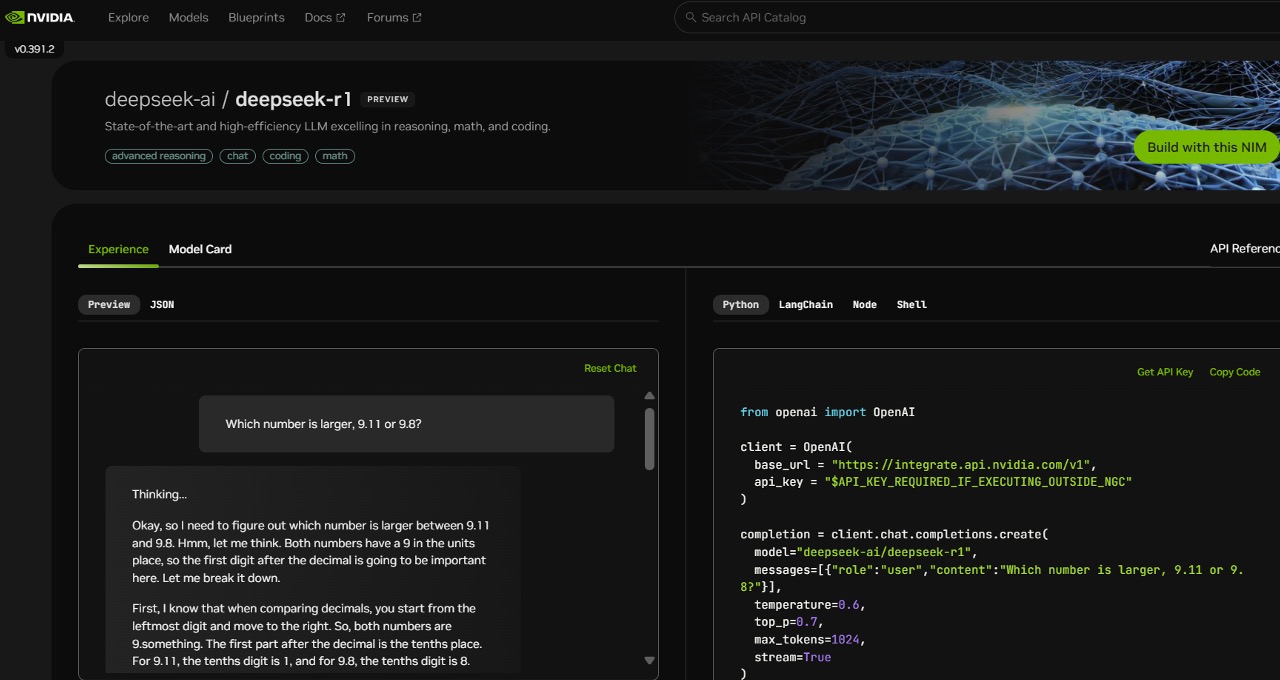

Similarly, DeepSeek-R1 is already being used to distill its reasoning into an array of other, much smaller models, the difference being that DeepSeek offers industry-leading performance. DeepSeek-R1 not only outperforms the leading open-source alternative, Llama 3; it also shows the full chain of thought behind its answers transparently. Some flaws emerged, which led the team to reintroduce a limited amount of SFT during the final stages of building the model. Even so, the results confirmed the fundamental breakthrough: reinforcement learning alone could drive substantial performance gains. Last year, reports emerged about some early innovations the company was making, such as mixture-of-experts and multi-head latent attention. Meta's Llama has become a popular open model even though its datasets have not been made public and it carries hidden biases, and lawsuits have been filed against it as a result. Meta's open-weights model Llama 3, for example, exploded in popularity last year as developers fine-tuned it to build their own custom models. Meta's Llama hasn't been instructed to do this by default; it takes aggressive prompting to make Llama do it. While the company hasn't disclosed the exact training data it used (side note: critics say this means DeepSeek isn't truly open source), modern techniques make training on web and open datasets increasingly accessible.
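DeepSeek's smaller distilled models were reportedly produced by fine-tuning existing models on outputs generated by R1. The sketch below shows a different, classic form of distillation that matches a student's softened output distribution to a teacher's. It is included only as a generic illustration of moving a larger model's behavior into a smaller one and is not DeepSeek's pipeline. The function names and the default temperature are hypothetical.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax, shifted by the max logit for numerical stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    teacher_logits: Sequence[float],
    student_logits: Sequence[float],
    temperature: float = 2.0,  # hypothetical default; higher values soften both distributions
) -> float:
    """KL(teacher || student) over temperature-softened distributions.

    The result is multiplied by temperature**2, a common convention that keeps
    gradient magnitudes comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2
```

A student that already matches the teacher gets a loss of zero. Mismatched logits give a positive loss, which training would push down.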

Various web projects I have put together over the years. This rapid commoditization could pose challenges, indeed serious pain, for leading AI providers that have invested heavily in proprietary infrastructure. Either way, this pales in comparison to leading AI labs like OpenAI, Google, and Anthropic, which each operate with more than 500,000 GPUs. All of this raises big questions about the investment plans pursued by OpenAI, Microsoft and others. The transparency has also given OpenAI a PR black eye. OpenAI has so far hidden its chains of thought from users, citing competitive reasons and a desire not to confuse users when a model gets something wrong. But the DeepSeek development may point to a path for the Chinese to catch up more quickly than previously thought. Moreover, they point to different but analogous biases held by models from OpenAI and other companies. They don't, because they are not the leader. It's not as if open-source models are new. However, it's true that the model needed more than just RL.
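Showing the chain of thought means a client has to separate it from the final answer. Below is a minimal sketch that assumes the model wraps its reasoning in a single `<think>...</think>` block. R1's open-weight releases use this convention, but check the output format of whatever endpoint you actually call. `split_reasoning` and `SplitOutput` are hypothetical names.

```python
import re
from typing import NamedTuple


class SplitOutput(NamedTuple):
    reasoning: str  # text inside the <think>...</think> block, or "" if absent
    answer: str     # remaining text once the reasoning block is removed


# Assumption: at most one reasoning block, delimited by <think> and </think>.
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> SplitOutput:
    """Separate a model's visible reasoning from its final answer."""
    match = _THINK_RE.search(raw)
    if match is None:
        # No reasoning block: treat the whole output as the answer.
        return SplitOutput(reasoning="", answer=raw.strip())
    reasoning = match.group(1).strip()
    answer = (raw[: match.start()] + raw[match.end():]).strip()
    return SplitOutput(reasoning=reasoning, answer=answer)
```

With the reasoning returned as a separate field, an application can choose to display it, log it, or hide it.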

After more than a decade of entrepreneurship, this is the first public interview for this rarely seen "tech geek" type of founder. It was the company's first AI model, launched in 2023, and was trained on 2 trillion tokens across 80 programming languages. This model, again based on the V3 base model, was first given a limited round of SFT focused on a "small amount of long CoT data," also called cold-start data, to fix some of the challenges. The journey to DeepSeek-R1's final iteration began with an intermediate model, DeepSeek-R1-Zero, which was trained using pure reinforcement learning. After that, it went through the same reinforcement learning process as R1-Zero. DeepSeek challenged this assumption by skipping SFT entirely and relying instead on reinforcement learning (RL) to train the model. This milestone underscored the power of reinforcement learning to unlock advanced reasoning capabilities without relying on traditional training methods like SFT. The code included struct definitions and methods for insertion and lookup, and it demonstrated recursive logic and error handling. Custom-built models may require a higher upfront investment, but the long-term ROI is hard to dispute, whether it comes from increased efficiency, better data-driven decisions, or reduced error margins. Now that you have determined the purpose of the AI agent, integrate the DeepSeek API into the system to process input and generate responses.
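DeepSeek's paper describes R1-Zero's reinforcement learning stage as using rule-based rewards for answer accuracy and output format, together with Group Relative Policy Optimization (GRPO). GRPO scores each sampled answer relative to the other answers in its group. The sketch below shows only those two pieces, under simplifying assumptions. The exact-match answer check, the 0.5/1.0 reward weights, and the function names are hypothetical. The policy-gradient update itself is omitted.

```python
import re
import statistics

THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def rule_based_reward(completion: str, reference_answer: str) -> float:
    """Hypothetical reward: a format bonus plus an exact-match accuracy bonus."""
    reward = 0.0
    # Format reward: the completion contains a <think>...</think> reasoning block.
    if THINK_BLOCK.search(completion):
        reward += 0.5
    # Accuracy reward: the text outside the reasoning block matches the reference exactly.
    answer = THINK_BLOCK.sub("", completion).strip()
    if answer == reference_answer.strip():
        reward += 1.0
    return reward


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Normalize each completion's reward by its group's mean and population std."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    # Three sampled completions for the same prompt, whose reference answer is "4".
    group = ["<think>2+2=4</think>4", "<think>guess</think>5", "4"]
    rewards = [rule_based_reward(c, "4") for c in group]  # [1.5, 0.5, 1.0]
    print(rewards, group_relative_advantages(rewards))
```

Completions that beat their group's average get a positive advantage and those below it get a negative one. Because of this, no separately trained value model is needed to produce the learning signal.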
